How children make sense of the complex signals they hear in the classroom depends partly on their hearing and cognitive abilities. But newly published research sheds more light on the important part the acoustic environment plays in this process, specifically the role of reverberation time.

The ability to make sense of what you are hearing when there are other talkers to ignore is an important skill that we use every day in a variety of contexts. But this has particular relevance for the field of education, where competing sounds from other learners in high reverberation time environments are a constant reality in almost all educational scenarios.

Recent changes in classroom design and pedagogical approaches, as well as the increasing use of technology, have all contributed to producing a more dynamic educational environment. Different activities are occurring in the same spaces as teachers differentiate and democratize the educational process.

For example, in open-plan classrooms, multiple lessons are occurring simultaneously. And compared to adults, children are particularly vulnerable to distraction as well as sounds that mask what they are listening to. As such, any benefit we can produce by altering the environment becomes even more essential for lifting barriers to learning in educational spaces.

Groundbreaking research

And now, new research [1] published in The Journal of the Acoustical Society of America has found that improving room acoustics helps children to deal with listening scenarios in which more than one person is speaking simultaneously.

This is a valuable scientific contribution because, although this subject has been investigated in adults, the authors found no previous studies showing that improved acoustics help children to cope better with challenging multi-talker listening situations.

From the cocktail party to the classroom

One of the first studies which looked into how we cope with multi-talker listening scenarios called it The Cocktail Party Problem [2] in reference to the fact that this skill is particularly useful in the multi-talker context of a party. However, this skill is obviously deployed in many different environments including the office and the classroom and not just over cocktails.

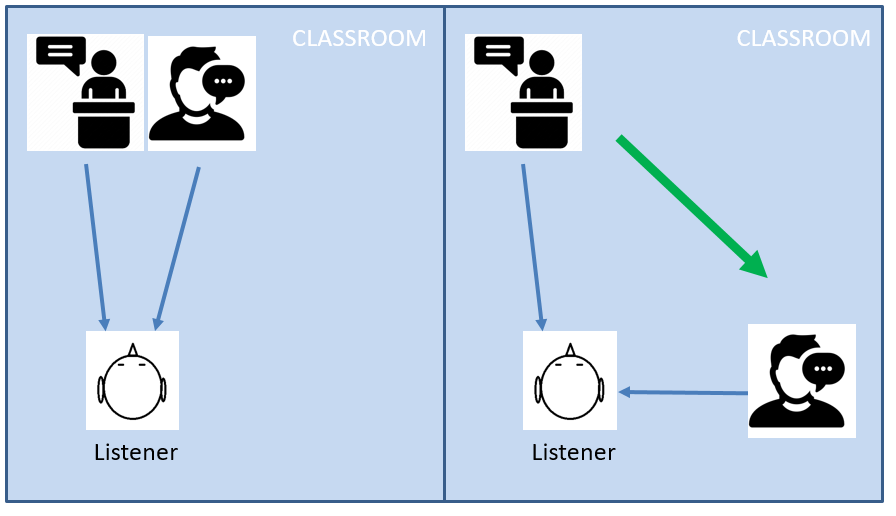

The ability itself (i.e. auditory stream segregation) is partly dependent on our ability to tell where a sound is coming from in space (i.e. localization). Once your brain identifies where the sound is coming from, it tunes in to the sound signal coming from that location much more easily.

Indispensable to this process of auditory streaming is the fact that most people have two ears, which provide “binaural” information about the sound environment [3]. For example, a sound arrives first at the ear closest to its source. Likewise, a sound is generally louder at the ear closest to its source, because the head gets in the way of the far ear (the head-shadowing effect).

These minute binaural time and volume differences help the brain to localize or place the sound in the room. Once that is achieved, the process of segregating that sound from competitors vying for the listener’s attention and ears can begin.
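To give a feel for how small these time differences are, here is a minimal sketch using Woodworth's classic spherical-head approximation. The formula, the head radius and the speed of sound are assumptions brought in for illustration; none of them come from the study discussed here, and a child's head is smaller than the adult value used.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees Celsius
HEAD_RADIUS_M = 0.0875      # assumption: commonly quoted adult head radius


def interaural_time_difference(azimuth_deg,
                               head_radius_m=HEAD_RADIUS_M,
                               speed_of_sound_m_s=SPEED_OF_SOUND_M_S):
    """Approximate arrival-time difference between the two ears, in seconds.

    Woodworth's spherical-head model: ITD = (r / c) * (theta + sin(theta)),
    with theta the source angle from straight ahead (0 to 90 degrees).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound_m_s) * (theta + math.sin(theta))


for azimuth in (0, 30, 60, 90):
    itd_us = interaural_time_difference(azimuth) * 1e6
    print(f"{azimuth:>2} degrees: {itd_us:.0f} microseconds")
```

Under these assumptions, even a talker directly to one side produces a difference of only about 0.66 milliseconds, which is the scale of cue the brain is working with.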

Results show that better room acoustics positively affect auditory stream segregation

How well the process explained above works in these multi-talker scenarios also depends on other contributing factors, for example, aspects relating to the voices involved (accent, prosody, loudness, etc.). What they are talking about also matters; if someone says your name from across a room then you are likely to tune in to that other signal regardless of just about anything [4].

But now it has been shown that the acoustic environment also plays a role.

Researchers measured how well children were able to hear a target sound when there was a competing talker under various conditions. Among the things they compared were the respective locations of the target and masking voices as well as the reverberation times (RT) in the simulated rooms in which the talkers were speaking; namely, RTs of 0.4 and 1.1 seconds.

They found that a lower reverberation time was of clear benefit for both children’s and adults’ speech perception, particularly when the voice they were listening to and the distracting voice were separated from one another in space.

For 7-9-year-old children, having a lower reverberation time benefited speech reception thresholds (SRTs) for 50% intelligibility by a statistically significant 2.8 dB. This figure increased to 4.6 dB in 10-12-year-old children. Meanwhile, adults experienced a similar benefit of 4.5 dB.
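Decibel figures can be hard to picture, so the short sketch below converts each reported benefit into a linear power ratio using the standard relation ratio = 10^(dB/10). It assumes the SRT is expressed as the level of the target speech relative to the competing talker, so that a benefit of X dB means the target can be X dB weaker for the same 50% intelligibility; the function and variable names are illustrative only.

```python
def db_to_power_ratio(delta_db):
    """Convert a level difference in decibels to a linear power ratio."""
    return 10 ** (delta_db / 10)


# SRT benefits of the lower reverberation time, as quoted in the article.
srt_benefit_db = {
    "7-9 years": 2.8,
    "10-12 years": 4.6,
    "adults": 4.5,
}

for group, benefit_db in srt_benefit_db.items():
    ratio = db_to_power_ratio(benefit_db)
    print(f"{group}: {benefit_db} dB is a power ratio of about {ratio:.1f}")
```

On that reading, the 2.8 dB benefit corresponds to a power ratio of roughly 1.9, and the 4.5-4.6 dB benefits to a ratio of nearly 3.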

Are there real-world implications for reverberation time research?

As for the applicability of this study to everyday listening scenarios, a high RT like the one used in this study is common in larger rooms such as those in which open-plan teaching happens. In Finland, for example, where such classrooms are in vogue, the recommendation for open-plan classrooms is 0.9-1.1 seconds.

This study should therefore serve as a warning for designers of open-plan classrooms to consider the impact of the acoustic conditions they create on learners’ speech perception. And the message for standardization committees is that these recommendations leave room for improvement.
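For a rough sense of why larger rooms tend toward longer reverberation times, and of what lowering RT demands from a designer, Sabine's classical formula (RT = 0.161 × V / A, in metric units) can be sketched as follows. The formula is a textbook approximation and is not taken from the study; the room dimensions are hypothetical.

```python
SABINE_CONSTANT_S_PER_M = 0.161  # metric form of Sabine's formula


def sabine_rt(volume_m3, absorption_m2):
    """Estimated reverberation time in seconds for a given room volume
    and total equivalent absorption area (in square metres)."""
    return SABINE_CONSTANT_S_PER_M * volume_m3 / absorption_m2


def absorption_needed(volume_m3, target_rt_s):
    """Equivalent absorption area (m^2) needed to reach a target RT."""
    return SABINE_CONSTANT_S_PER_M * volume_m3 / target_rt_s


# Hypothetical room, chosen only for illustration: 10 m x 8 m x 3 m.
room_volume_m3 = 10 * 8 * 3

for target_rt_s in (1.1, 0.4):
    area_m2 = absorption_needed(room_volume_m3, target_rt_s)
    print(f"RT of {target_rt_s} s needs about {area_m2:.1f} m^2 of absorption")
```

Because the estimate scales with volume, a bigger room needs proportionally more absorbing surface to reach the same RT. For this hypothetical room, moving from 1.1 s to 0.4 s means going from roughly 35 m² to roughly 97 m² of equivalent absorption area.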

On the other hand, the low RT condition of 0.4 seconds was quite close to many recommendations for ordinary-sized classrooms (e.g. ANSI [USA] = 0.6 s, BB93 [UK] = 0.6 s, SS 25268 [Sweden] = 0.5 s). This difference of 0.1-0.2 seconds is not large enough to expect a significant reduction in the effect when compared with the high reverberation time (1.1 s) condition used in the study. So the predicted improvement can be expected in rooms that move from high to substantially lower RT conditions that are in line with existing standards.
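The comparison in the paragraph above is simple arithmetic, sketched here with the values quoted in this article. The dictionary and function names are illustrative, and the figures are the single values cited above rather than the full text of each standard.

```python
STUDY_LOW_RT_S = 0.4
STUDY_HIGH_RT_S = 1.1

# Recommended classroom reverberation times as quoted in the article.
recommended_rt_s = {
    "ANSI (USA)": 0.6,
    "BB93 (UK)": 0.6,
    "SS 25268 (Sweden)": 0.5,
}


def rt_gaps(recommended, low_rt_s, high_rt_s):
    """For each standard, return (gap above the study's low RT,
    gap below the study's high RT), rounded to two decimals."""
    return {
        name: (round(rt - low_rt_s, 2), round(high_rt_s - rt, 2))
        for name, rt in recommended.items()
    }


for name, (above_low, below_high) in rt_gaps(
        recommended_rt_s, STUDY_LOW_RT_S, STUDY_HIGH_RT_S).items():
    print(f"{name}: {above_low} s above the low condition, "
          f"{below_high} s below the high condition")
```

Each recommendation sits 0.1-0.2 s above the study's low condition but 0.5-0.6 s below its high condition, which is why the standards are much closer to the favorable end of the comparison.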

Therefore, this experiment indicates that children stand to gain up to 4.6 dB in speech perception in a multi-talker scenario when acoustics are improved in realistic ways, with similar improvements for adults. This has obvious consequences for the acoustic design of learning spaces; namely, to follow the example of existing standards and reduce reverberation times in any room in which communication is an essential part of the learning process.

In this way, we hope that learning environments can become almost as fun as a cocktail party.

Sources

[1] Peng, Z. E., Pausch, F., & Fels, J. (2021). Spatial release from masking in reverberation for school-age children. The Journal of the Acoustical Society of America, 150(5), 3263-3274.

[2] Cherry, E. C. (1953). Some experiments on the recognition of speech, with one and two ears. The Journal of the Acoustical Society of America, 25, 975-979.

[3] Bronkhorst, A. W., & Plomp, R. (1988). The effect of head‐induced interaural time and level differences on speech intelligibility in noise. The Journal of the Acoustical Society of America, 83(4), 1508-1516.

[4] Wood, N., & Cowan, N. (1995). The cocktail party phenomenon revisited: how frequent are attention shifts to one’s name in an irrelevant auditory channel? Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(1), 255.